Finding the Right Plan

Seravo’s premium hosting and upkeep include everything you need for a secure and fast WordPress website. Each WordPress hosting plan includes an optimized server environment, the upkeep of one or more domains, automatic backups, tested updates, and much more! Various plans are available for websites of all sizes. Every plan also has a designated amount of disk space and a monthly quota of HTTP requests.

The service also adapts to traffic: whenever there’s more web traffic on a site, new processes are created to serve the site’s needs. As the architecture is based on clusters in which several physical machines host the site, it is guaranteed that your WordPress site will continue to perform optimally even under growing demand. This architecture is why we monitor the number of HTTP requests each month, and why that number is the basis for our pricing.

WP Pro is our basic plan, meeting the needs of most small or medium-sized WordPress sites. If your site is an e-commerce site or has WooCommerce installed, the WP Business plan is the right choice. WP Corporate is our premium plan, aimed at high-traffic websites. For a closer look at the features of each plan, see our price list.

Every website hosted at Seravo also has the Seravo Plugin pre-installed, which shows information about the resources used by your site. A report of HTTP requests is generated each month and can be examined in the plugin (Site status). If the number of HTTP requests exceeds the quota, it’s time to upgrade to a more suitable plan.

No Hosting Overage Charges at Seravo

Although Seravo monitors and examines the number of requests, we will never charge extra if the quota is momentarily exceeded, nor will we block access to your site if traffic suddenly increases. The performance and availability of your site are our top priority in all situations!

What Are HTTP Requests, Anyway?

Websites are assembled client-side within a browser window: browsers make requests, and servers answer them. As a visitor loads the front page of a website, their browser asks for the files needed to render the page. These include all types of files, for example images, style sheets or scripts. If the front page requires 40 different files, one page load generates 40 individual HTTP requests.

The number of files downloaded on one page load, and thus the number of HTTP requests, may be reduced if the website’s HTTP caching works. When properly cached, a new page load won’t generate the same 40 requests again, but perhaps only 4 or 5 additional requests, as most of the content has been temporarily stored by the visitor’s web browser.
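The arithmetic above can be sketched roughly as follows. This is a minimal illustration, not how Seravo counts requests: the function name and the monthly visit figures are hypothetical, and the only numbers taken from the text are the 40 requests for an uncached page load and about 5 for a cached one.

```python
def estimate_monthly_requests(first_loads, repeat_loads,
                              requests_uncached=40, requests_cached=5):
    """Estimate the HTTP requests generated in a month.

    first_loads:  page loads with an empty browser cache
    repeat_loads: page loads where most files are already cached
    """
    return first_loads * requests_uncached + repeat_loads * requests_cached


# Hypothetical month with 30,000 page loads in total.
without_cache = estimate_monthly_requests(30_000, 0)
with_cache = estimate_monthly_requests(10_000, 20_000)
print(without_cache)  # 1200000
print(with_cache)     # 500000
```

In this made-up scenario, working caching cuts the monthly total by more than half, even though the number of page loads is the same.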

When the number of HTTP requests stays moderate, the site will also perform better. It’s a good idea to keep track of the site’s resources and to examine loading times with a speed test if the site doesn’t seem to be loading properly. Speed optimization may offer some tips and tricks that also reduce the number of requests. Keep reading to find out how to decrease the number of HTTP requests on your site, and check out our expert tips for getting started with speed tests and speed optimization!

The Good, the Bad and the Bots

As we know, not all web traffic on your site is caused by humans: sites are also frequented by bots. For example, a web crawler or spider crawls your site and indexes its contents. This way your site is categorized and becomes more visible in various search engines, such as Google or Bing. Problems can occur, however, if several bots arrive to scour the site simultaneously. With the help of site analytics, harmful traffic can be recognized.

The more visited and cross-linked your site is in general, the more interesting it is to bots that want to go through your site’s contents. As they have to keep track of updates and changes, they unfortunately come back to scan the site every so often. Changes can be made to the robots.txt file in order to give them rules for scanning, such as whether or not they are allowed to come and take a look, but bad bots especially may decide to ignore them. Even “nice” and useful bots may react very slowly to any changes in robots.txt, so changing the rules on the fly does not offer immediate relief.

It isn’t always feasible to block search engine bots altogether, but they can be asked to slow down their crawling by defining a Crawl-delay in robots.txt. This value tells a bot how frequently it may make individual requests on a site, typically as a number of seconds to wait between requests. Googlebot, however, ignores this directive and must be controlled via Search Console. It is more efficient to disallow search engine bots that don’t bring any relevant traffic to your site.
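To see how such rules are read by a well-behaved bot, the sketch below parses a small robots.txt with Python’s standard `urllib.robotparser` module. The file contents, the bot names (`ExampleBot`, `OtherBot`) and the 10-second delay are made up for illustration and are not Seravo recommendations. Crawl-delay is also a non-standard directive, so real crawlers may interpret or ignore it differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents; bot names and values are examples only.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Crawl-delay: 10
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# ExampleBot is disallowed from the whole site.
blocked = parser.can_fetch("ExampleBot", "https://example.com/")
# Any other bot may fetch public pages, but is asked to pause between requests.
allowed = parser.can_fetch("OtherBot", "https://example.com/blog/")
delay = parser.crawl_delay("OtherBot")

print(blocked, allowed, delay)  # False True 10
```

Note that these rules are only requests: nothing in robots.txt technically prevents a bot from ignoring them.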

Seravo also filters harmful traffic on sites, and our 24/7 monitoring will step in if a problem is detected. However, it can’t always be easily determined whether traffic is welcome exposure or excess scanning.

How Can I Reduce HTTP Requests on My WordPress Site?

Speed optimization can also reduce the number of HTTP requests, as the goal is to decrease the resources a site needs in order to load. Fewer HTTP requests are generated on your site when fewer images, scripts or style sheets are loaded in order to view it. Before trying to cut down the number of requests, we recommend taking a look at the GoAccess reports to find out which page gets the most requests.
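The idea behind such a report, counting requests per path in an access log, can be sketched in a few lines. This is only an illustration and not a replacement for GoAccess: the log format (common/combined style), the function name and the sample lines are assumptions invented for the example.

```python
import re
from collections import Counter

# Matches the request part of a common/combined access log line,
# e.g. "GET /path HTTP/1.1". The log format is an assumption.
REQUEST_RE = re.compile(
    r'"(?:GET|POST|HEAD|PUT|DELETE|OPTIONS|PATCH) (\S+) HTTP/[\d.]+"'
)


def count_requests_by_path(log_lines):
    """Return a Counter mapping request path -> number of requests."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match:
            # Drop the query string so /page?a=1 and /page?a=2 count together.
            counts[match.group(1).split("?", 1)[0]] += 1
    return counts


# Hypothetical sample lines, invented for illustration.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Jan/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 5120',
    '203.0.113.5 - - [01/Jan/2024:10:00:01 +0000] "GET /wp-content/style.css HTTP/1.1" 200 880',
    '198.51.100.7 - - [01/Jan/2024:10:00:02 +0000] "POST /xmlrpc.php HTTP/1.1" 200 370',
    '198.51.100.7 - - [01/Jan/2024:10:00:03 +0000] "POST /xmlrpc.php HTTP/1.1" 200 370',
    '198.51.100.7 - - [01/Jan/2024:10:00:04 +0000] "POST /xmlrpc.php HTTP/1.1" 200 370',
]

top = count_requests_by_path(SAMPLE_LOG).most_common(1)
print(top)  # [('/xmlrpc.php', 3)]
```

A path that dominates the list, especially one that regular visitors never open, is a good first candidate for closer inspection.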

Removing unneeded, unused plugins is always a good idea on any WordPress site. Plugins may use their own style sheets or scripts that add to the number of requests made on a single page load. By reducing the number of plugins you will also make your WordPress site safer, as there will be fewer opportunities for a security flaw in the plugins’ code.

Lazy loading makes your site faster and can also reduce the number of requests: an image isn’t loaded until it is about to be shown to the visitor in the browser. Read our tips for lazy loading here!

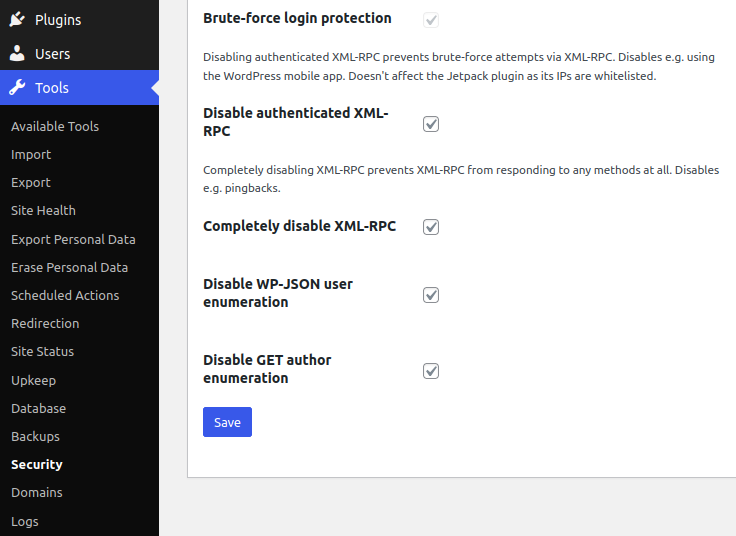

One file in particular gets the most attention from harmful bots: xmlrpc.php. The purpose of this file is to allow remote access to a WordPress installation, but it can also be used nefariously in a brute-force attack against the site. WordPress supports XML-RPC until its own API takes over, but for now the support is here to stay. Seravo offers an easy way to block access to xmlrpc.php altogether: just log in to your WordPress site and look for the menu Tools > Security > “Completely disable XML-RPC”. Read more about the Seravo Plugin’s security settings in our knowledge base.

If the site is getting a lot of unwanted traffic from certain countries or IP addresses, it may be tempting to limit their access by implementing a geoblock on the site. The problem, however, is that the configuration may also block welcome traffic. Keep in mind that IP addresses can also be changed quite easily!

WordPress Service That Adapts

When your site is hosted at Seravo, the number of HTTP requests is examined over a period of time. Our sales team and customer service will have a look at the resources used by a site and contact you if there is an apparent need to upgrade your site’s plan.

Please contact us to discuss your plan or any other matter regarding your site. We’d be happy to help you by email at help@seravo.com, or you can chat with us!