Cache is one of those things you often hear about but might never see explained anywhere. We decided it was time to write a quick guide about the different levels of caching that exist out there.

Caching Makes Your Site Ten Times Faster

Simply put, caching is a very common and useful technique that can help serve requests up to ten times faster than without caching involved. The way it works is that the cache stores the answer to a query for a short time. If the same query is run again, there’s no need to do any complex calculations or run any resource-intensive processes to get the answer – the cache simply returns the same answer it stored when the query was previously answered.

The beauty of caching, however, is that you can safely empty or destroy it at any time since the data is never truly lost as a result of cache purging. Instead, it just needs to be re-fetched or calculated from its original source. No data is lost but it does take more time and effort when compared to serving the same data from the cache.
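The idea in the two paragraphs above can be sketched in a few lines of Python. This is only an illustration: `expensive_answer` is a made-up stand-in for a slow calculation, and the dictionary plays the role of the cache.

```python
cache = {}          # query -> stored answer
computations = 0    # how many times the slow path actually ran

def expensive_answer(query):
    """Hypothetical stand-in for a slow calculation or lookup."""
    global computations
    computations += 1
    return query.upper()

def answer(query):
    """Serve from the cache when possible, otherwise compute and store."""
    if query not in cache:
        cache[query] = expensive_answer(query)
    return cache[query]

first = answer("top posts")    # computed and stored
second = answer("top posts")   # served from the cache, no computation
cache.clear()                  # purge: nothing is lost
third = answer("top posts")    # simply recomputed from the source
```

After the purge the answer is still the same; it just costs one more run of the slow function.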

What is Caching?

A common example of cache in action is when you browse the internet and end up on, for example, this excellent Wikipedia page about caching. When you visit the page for the first time, the site is loaded from the server to your browser in its entirety. Then you decide to leave the page to visit the Seravo Developer Documentation to see if we’ve included HTTP caching in our default NGINX configuration (fear not, we have!).

After satisfying your curiosity you decide to return to the Wikipedia article you were previously browsing. Now, instead of the server serving the whole website to your browser over the internet, your browser shows you the page directly from its memory, as the browser has cached the page locally. Depending on the size of the page, the reduction in load time granted by browser caching can be significant, with the local cache serving the same page in milliseconds compared to the few seconds it takes to load the page again from the server.

Whether or not a page is cached by the browser is controlled by the site, which passes on header information on the initial page load. This header information often contains expiry instructions, such as a date and time, to let the browser know how long the cached page is valid and when it should be refreshed from the server. We’ll get to these headers from a site developer’s perspective later, as they are quite vital in ensuring the proper functionality of a site.

Common Levels of Caching

Caching is actively used at many different levels and in many applications, such as software, operating systems, device drivers, and even inside CPUs. The terms L1, L2 and L3 cache might ring a bell for those who have assembled their own PCs, as the size and type of the processor’s cache are detailed in the product specification alongside clock speed and socket type. Generally, the larger the cache, the faster the processor can handle computing, because it needs to retrieve data from the random-access memory, or RAM, less frequently. That’s why we use professional-grade server hardware, including CPUs with generous multi-tiered memory capable of handling demanding computing tasks and high loads.

Hard drives are another common piece of hardware with built-in caching for improved performance. The disk cache, or disk buffer, is controlled by a microcontroller and stores data that can be accessed faster than by reading it directly from the main storage of the drive. This improvement in speed is especially noticeable with traditional HDDs, but SSDs have a built-in cache as well. The performance difference between HDDs and SSDs is significant enough that, despite the caching, an HDD can never outperform an SSD, let alone a newer PCIe SSD. That is why we exclusively use SSDs and PCIe SSDs in our server infrastructure.

Because the cache is used to make things faster, it makes sense for the place where the cache is stored to be, well, faster. These faster memory types used to store the cached data are often smaller than the actual storage memory for the data, and a lot more expensive as well. This is also why a computer might have its storage space measured in terabytes, but its working memory (RAM) measured in gigabytes. RAM is much faster than any SSD, but it’s significantly more expensive, often costing around 7-8€ per gigabyte. If you wanted to replace your 120€ 1TB SSD with RAM, you’d end up paying around 7500€ for that luxury.
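The price comparison above is simple arithmetic, spelled out here for clarity. The figures are the ones quoted in the paragraph (using the midpoint of the 7-8€ range and counting 1 TB as 1000 GB); actual prices vary.

```python
# Figures taken from the paragraph above; real prices vary.
ram_price_per_gb_eur = 7.5   # midpoint of the quoted 7-8 EUR per gigabyte
ssd_price_eur = 120          # quoted price of a 1 TB SSD
capacity_gb = 1000           # 1 TB, counted as 1000 GB

ram_cost_eur = ram_price_per_gb_eur * capacity_gb   # cost of 1 TB of RAM
ratio = ram_cost_eur / ssd_price_eur                # RAM cost relative to the SSD

print(f"1 TB of RAM: about {ram_cost_eur:.0f} EUR")
print(f"That is roughly {ratio:.1f} times the price of the SSD")
```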

RAM has numerous use cases as the working memory of the computer. Different operating systems have varied methods and approaches for utilizing it, but the goal is always the same: faster performance. Linux, the operating system of choice for our server hardware, automatically uses RAM that is otherwise unused as a cache for the storage drives mounted to the system. This behavior reduces the load experienced by the drives themselves, resulting in fewer cases of I/O load causing performance issues. The same principles also apply to software that you run on your computer. The software uses RAM to store frequently used bits of data in order to reduce the load on the hard drives.

WordPress, more often than not, benefits directly from the performance boost of these caching mechanisms, because servers have professional-grade versions of the same hardware used to build PCs. The higher the quality of the hardware, the better the performance of the WordPress site hosted on that server. But it’s not that simple in real life, because we can also use caching to speed up the software that runs on the hardware and is used to serve those WordPress sites.

Let’s take a look at how we use the server software to cache WordPress.

The Crucial Caches of WordPress

The important caches for WordPress users are the ones that affect the handling of files, such as PHP and image files, the database functionality, the operations of the built-in WordPress cache, and the caches between WordPress, the HTTP Server and the browser. We’ve set up performance optimizing caching functions for all these different layers of the cake called WordPress caching. These optimizations are in use by default, but can be disabled by the customer as needed.

PHP Cache

We’ve implemented PHP in a way that there are always PHP processes ready to serve requests and the caching has been configured to be active out of the box. Our PHP caching includes all PHP files from WordPress itself, the plugins, the themes, and even all the PHP libraries. This way there’s no need for any disk reading when a request comes in: it’s served directly from the PHP cache.

If there are changes or updates made to the site or the WordPress core, the cache is programmed to reset and load the latest version that includes the changes in the PHP files automatically.

MariaDB Database Cache

If WordPress is acting sluggish, the reason is often a slow database. A slow database, on the other hand, is often the end result of an unnecessarily large number of requests or cross references. Good SQL queries and correct indexing of tables and data are important for a fast and optimized database, but you can also achieve speed increases without altering the code of your WordPress site. No surprises here: this is done by caching the answers to the most frequently used database queries in RAM.
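To illustrate the principle of answering repeated queries from RAM, here is a minimal sketch. It is not how MariaDB implements its caching: it uses Python’s built-in SQLite module as a stand-in database, and the `posts` table, `cached_query` and `write` are names invented for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
conn.executemany("INSERT INTO posts (title) VALUES (?)", [("Hello",), ("Caching",)])

query_cache = {}   # (sql, params) -> list of rows kept in RAM
db_reads = 0       # how many times the database was actually queried

def cached_query(sql, params=()):
    """Answer repeated SELECTs from RAM; query the database only on a miss."""
    global db_reads
    key = (sql, params)
    if key not in query_cache:
        db_reads += 1
        query_cache[key] = conn.execute(sql, params).fetchall()
    return query_cache[key]

def write(sql, params=()):
    """Any write may change query results, so drop all cached answers."""
    conn.execute(sql, params)
    query_cache.clear()

titles = cached_query("SELECT title FROM posts ORDER BY id")
titles_again = cached_query("SELECT title FROM posts ORDER BY id")  # no database read
write("INSERT INTO posts (title) VALUES (?)", ("New post",))
titles_after_write = cached_query("SELECT title FROM posts ORDER BY id")
```

Clearing the whole cache on every write is the bluntest possible invalidation rule; it is used here only to keep the sketch short and correct.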

WordPress Transients and Redis Cache

WordPress itself has a built-in caching mechanism that developers can utilize by using the Transients API functions. An example use case of transients would be a list of ten most popular articles on a site. Fetching the list over and over again on every page load for each and every visitor will be an extremely strenuous operation. With the help of transients, the result of the top ten list calculation can, for example, be cached

Regular WordPress installations store transients in the database, where the calculated values are fetched when requested. However, we don’t use the database as storage for transients. We use a dedicated Redis cache instead to store the transients within RAM for an even faster and less straining serving experience when PHP processes request the data.
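The top ten example can be sketched as a small key/value store whose entries expire. This is a Python analogy of the idea behind transients, not the WordPress Transients API itself; `TransientStore`, `compute_top_articles` and the 600-second lifetime are assumptions made for illustration.

```python
import time

class TransientStore:
    """Tiny in-memory key/value store whose entries expire."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._items = {}  # key -> (value, expires_at)

    def set(self, key, value, ttl_seconds):
        self._items[key] = (value, self._clock() + ttl_seconds)

    def get(self, key):
        """Return the value, or None if it is missing or has expired."""
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]
            return None
        return value

def compute_top_articles():
    """Hypothetical stand-in for the expensive popularity calculation."""
    return ["Article A", "Article B", "Article C"]

store = TransientStore()

def top_articles():
    """Reuse the stored list while it is fresh, recompute it otherwise."""
    cached = store.get("top_articles")
    if cached is None:
        cached = compute_top_articles()
        store.set("top_articles", cached, ttl_seconds=600)  # assumed lifetime
    return cached
```

Keeping the entries in a dictionary mirrors the point made above: the values live in RAM, so reading them does not touch the database at all.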

Reverse Position HTTP Caching System

A fancy name for what is essentially a web cache that runs on our servers instead of the visitor’s browser and provides near-instant access to websites that have been entirely stored in the cache. Some sites receive updates on an hourly basis, or even more frequently in some extreme cases, and in these types of

When a site stays the same for a longer period of time, the HTTP cache allows the site to be served without having to execute any PHP code. The site is often stored for between 10 minutes and an hour. Our HTTP servers always inspect the header information emitted by the PHP processes when printing the HTML page and cache the data they serve when allowed to do so.

HTTP caching with the reverse position system can easily result in a tenfold speed boost for the site, because no PHP processes are run and no database queries are made during the page load.
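The behavior described above can be sketched as a small server-side cache placed in front of the page generator. This is a simplified illustration, not Seravo’s actual server configuration: `render_page` is a hypothetical stand-in for PHP and the database, the caching rule is deliberately crude, and expiry is left out entirely.

```python
php_runs = 0  # how many times the backend had to build a page

def render_page(path):
    """Hypothetical stand-in for PHP and the database building a page."""
    global php_runs
    php_runs += 1
    return {"Cache-Control": "max-age=600"}, f"<html>page for {path}</html>"

class ReverseCache:
    """Sits in front of the backend and replays stored responses."""

    def __init__(self, backend):
        self.backend = backend
        self.store = {}  # path -> body

    def fetch(self, path):
        if path in self.store:
            return "HIT", self.store[path]
        headers, body = self.backend(path)
        directives = headers.get("Cache-Control", "").lower()
        # Simplified rule: store only when the backend explicitly allows it.
        allowed = "max-age=" in directives and not any(
            d in directives for d in ("no-store", "private", "no-cache")
        )
        if allowed:
            self.store[path] = body
        return "MISS", body

cache = ReverseCache(render_page)
statuses = [cache.fetch("/")[0] for _ in range(3)]
```

Only the first request reaches the backend; the following ones are answered from the stored copy, which is where the speed boost comes from.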

Forward Position HTTP Caching System

Forward cache in turn is the web cache that is outside of our servers, in this case the visitor’s browser. Modern browsers read the same HTTP headers to determine whether or not they are allowed to cache the site. If there are no HTTP headers restricting caching, the browser will use the opportunity to cache the website, at least for the duration of the browsing session in question.

When utilized correctly, browser caching gives the biggest speed-up of all, because some page loads never go over the network at all and are served from the browser’s cache instead.

So-called static files, such as images and JavaScript files, are automatically set to be cached by Seravo’s default headers to ensure proper caching. Dynamic content generated by WordPress and PHP needs to be controlled on a per-site basis to ensure correct caching, meaning that the developer needs to control the HTTP headers the site emits.

How to Control Caching

The fastest page load is one where the page is not even loaded again over the network, but is served from cache instead, which is why learning how HTTP headers work and how they can be used to control caching is extremely useful for any WordPress developer. Examples of HTTP headers used to control caching include Cache-Control and Expires. Both Mozilla and Wikipedia have excellent resources from which to study a more complete list of headers and how to use them.
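As a rough illustration of how a cache might read the Cache-Control header, the sketch below splits the header value into directives and derives how long a response may be reused. The function names are invented for this example, and the logic is much simpler than what real browsers do: for instance, it ignores the Expires header and treats a missing max-age as "do not reuse".

```python
def parse_cache_control(value):
    """Split a Cache-Control header value into a dict of directives."""
    directives = {}
    for part in value.split(","):
        part = part.strip().lower()
        if not part:
            continue
        name, _, arg = part.partition("=")
        directives[name.strip()] = arg.strip().strip('"') or None
    return directives

def freshness_seconds(value):
    """Return how many seconds a response may be reused, or 0 if it may not."""
    directives = parse_cache_control(value)
    if "no-store" in directives or "no-cache" in directives:
        return 0
    max_age = directives.get("max-age")
    return int(max_age) if max_age and max_age.isdigit() else 0

print(freshness_seconds("public, max-age=3600"))
print(freshness_seconds("no-store"))
```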

Developers working on a site hosted with us can make use of our command line tools, including wp-check-http-cache, which tests to see if there are HTTP headers on the site that allow caching.

$ wp-check-http-cache

----------------------------------------

Seravo HTTP cache checker

----------------------------------------

Testing https://seravo.com/...

Request 1: HIT

Request 2: HIT

Request 3: HIT

----------------------------------------

SUCCESS: HTTP cache works for https://seravo.com/.

----------------------------------------

You can also test this yourself by running:

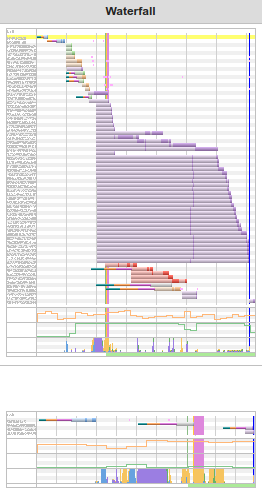

curl -IL https://seravo.com/

By using the command wp-speed-test you can test the page load times, and adding the flag --cache shows the HTTP cached version of those same page loads.

$ wp-speed-test --cache

Testing speed URL https://seravo.com...

Warning: invoked with --cache and thus measuring cached results. This does not measure actual PHP speed.

For an explanation of the different times, please see docs at https://curl.haxx.se/docs/manpage.html

URL TOTAL NAMELOOKUP CONNECT APPCONNECT PRETRANSFER STARTTRANSFER = AVG

https://seravo.com 0.010 0.004 0.005 0.008 0.008 0.010 0.010

https://seravo.com 0.002 0.000 0.000 0.000 0.000 0.002 0.006

https://seravo.com 0.002 0.000 0.000 0.000 0.000 0.002 0.005

Many people are familiar with the phrase “empty your browser cache”. In effect, it means asking your browser to bypass the cache and ask for a fresh HTML file from the server. A handier way of making sure the page you see is loaded from the server is to press Ctrl+F5 when using Chrome or Firefox. Our servers know when a user uses the bypass cache combination based on the request’s HTTP headers, which include Pragma: no-cache.

Controlling Caching is Not Easy

Using caching efficiently on websites is often very challenging. The majority of the data and pages out there could be cached all the time, but there are always exceptions. For example, a website showing election results could show data that is cached for an hour for most of the year and still be relevant and useful to visitors. However, when the latest election results come in, visitors want to see the latest results immediately, not an hour later.

Controlling all types of caching, including the HTTP cache, is difficult. When an expiration time has been set to an hour, the visitor’s browser won’t ask for the latest changes inside that hour, as the expiration time cannot be changed on the fly. A change to the HTTP headers shortening the cache expiration time will not be relayed to the visitor unless they bypass their browser cache on their own initiative. Server-level caching can be purged in a matter of seconds whenever necessary, but we cannot access the browsers of individual users. We just need to wait for their cache to expire and for the browser to make a new request. That’s when the new headers can be passed on to the visitor’s browser.
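The problem described above can be shown with a toy simulation. `Server` and `Browser` are hypothetical classes written for this example, and time is passed in as a plain number of seconds so the behavior is easy to follow.

```python
class Server:
    """Toy server that returns a cache lifetime and a page body."""

    def __init__(self):
        self.max_age = 3600
        self.body = "results v1"

    def respond(self):
        return self.max_age, self.body

class Browser:
    """Toy browser cache that trusts the lifetime it was given earlier."""

    def __init__(self, server):
        self.server = server
        self.cached = None  # (body, expires_at)

    def load(self, now, bypass_cache=False):
        if self.cached and now < self.cached[1] and not bypass_cache:
            return self.cached[0]           # no request is sent at all
        max_age, body = self.server.respond()
        self.cached = (body, now + max_age)
        return body

server = Server()
browser = Browser(server)
seen_at_0 = browser.load(now=0)                    # fetched, valid for an hour

# The site owner publishes new results and shortens the lifetime...
server.body, server.max_age = "results v2", 60

seen_at_600 = browser.load(now=600)                # ...but the browser never asks
seen_at_3600 = browser.load(now=3600)              # old copy expired, new fetch
forced = Browser(server).load(now=0, bypass_cache=True)
```

The shorter lifetime only reaches the browser with the next real request, which is exactly why the visitor keeps seeing the old page until the original hour has passed.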

Due to the trickiness of caching, there is a very popular saying in computer science that states “there are only two hard things in computer science: cache invalidation, naming things, and off-by-1 errors.”

To Cache or Not to Cache, That’s the Question

Caching is, despite its massive advantages, not always recommended. Imagine an online shop that uses too aggressive a cache. The cart would not show the new items the user adds to it, and in the worst-case scenario one logged-in user’s information could be served to a completely different user due to caching. WooCommerce and other eCommerce platforms automatically set cookies to prevent HTTP caching of pages for logged-in users to prevent these types of accidents.
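A server-side cache can avoid the accidents described above by refusing to serve shared copies to visitors who carry certain cookies. The sketch below shows the idea only: the cookie name prefixes are assumptions based on names commonly seen on WordPress and WooCommerce sites, not a statement of any particular server’s configuration, and `may_serve_from_shared_cache` is a name made up for this example.

```python
# Assumed cookie name prefixes that mark a visitor-specific session.
NO_CACHE_COOKIE_PREFIXES = (
    "wordpress_logged_in_",
    "woocommerce_items_in_cart",
    "wp_woocommerce_session_",
)

def may_serve_from_shared_cache(cookie_names):
    """Return False if any cookie suggests the page is specific to this visitor."""
    return not any(
        name.startswith(NO_CACHE_COOKIE_PREFIXES) for name in cookie_names
    )

anonymous = may_serve_from_shared_cache(["_ga"])
shopper = may_serve_from_shared_cache(["_ga", "woocommerce_items_in_cart"])
member = may_serve_from_shared_cache(["wordpress_logged_in_abc123"])
```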

At its best, caching can make a website load over ten times faster. Even small optimizations can easily improve the speed by ten or even twenty percent. That’s why caching is such a popular topic, despite the difficulties experienced by most developers when trying to figure out how to use it efficiently. But it’s worth the effort, and not just because of the increased speed. If caching allows fewer requests to be passed on to the server and the database, we’re getting more done while consuming less energy. Nature thanks you, and so do your site’s visitors.

Comments

2 responses to “Make Your Site Faster with Caching”

How to disable cache fully and install own cache plugin?

HTTP cache can be disabled by adding no-cache headers to your page, and object cache can be turned off by disabling Redis, although this is not recommended. Some caching plugins may be useful, but we recommend always testing your site’s performance before and after activating them! 🙂

More info on knowledge bank: https://help.seravo.com/article/304-cache-restrictions